The Future of Data Centre Electrical Grid Impacts

Data Centre Background

As new data centre developments expand rapidly across the United States to accommodate growth in IT-intensive sectors like artificial intelligence (AI), cloud computing, and e-commerce, they are reshaping electricity demand and presenting new challenges for grid planning and distribution system integration. These sectors require power-intensive servers, data storage, cooling systems and more. Understanding the drivers behind data centre load growth, the unique energy load profiles of data centres and their potential flexibility, and their integration with distribution systems is essential for utilities, policymakers, and industry leaders.

The following sections summarize Energeia’s latest research into data centre load growth, including the factors driving their development, strategies for efficient integration into grid infrastructure, and opportunities for increasing load flexibility and energy efficiency.

Drivers of Data Centre Growth

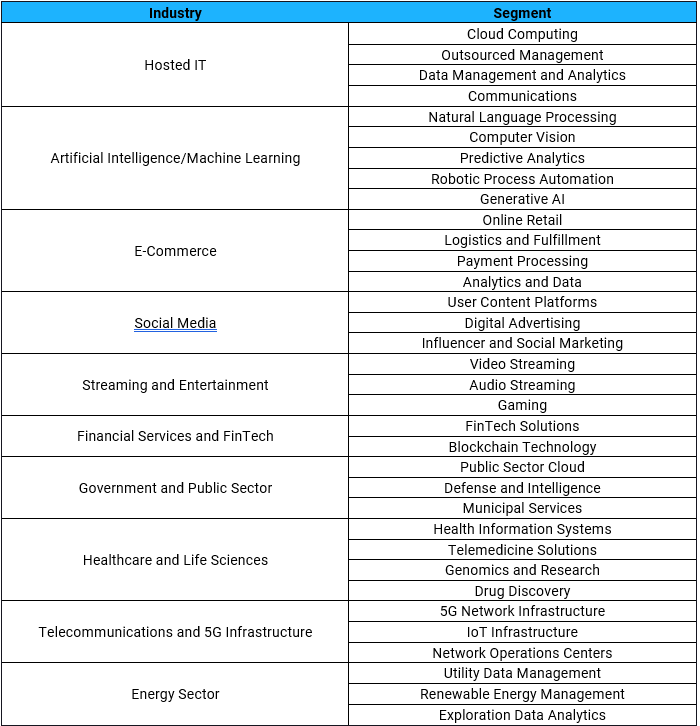

Generative AI, blockchain, social media, gaming, and virtual reality are among the top sectors driving data centre growth, as listed in Table 1. Each sector presents unique energy demands, from the computational intensity of AI training to the consistent, baseline uptime required by e-commerce platforms. These sector-specific characteristics influence energy intensity, synchronicity, and the right strategies for least-cost integration with the grid. The following section dives into detail for a selection of key industries driving data centre growth.

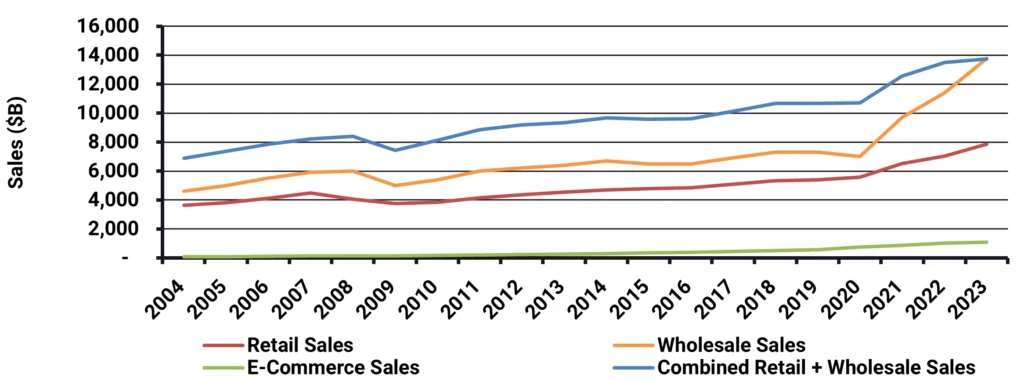

E-commerce, one of the oldest IT applications, has served as a foundational driver for cloud computing platforms like AWS. Initially growing in parallel with the U.S. economy until approximately 2010, the sector has since accelerated significantly. E-commerce exhibits notable load flexibility, due to factors such as inventory management, allowing for asynchronous operation. However, as transactions are continually digitized, Figure 1 suggests electricity demand in this sector could grow sevenfold over the next 10–20 years, assuming an 80% sales market saturation.
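To put the sevenfold figure in perspective, it can be converted into an implied annual growth rate. The sketch below assumes smooth compound growth over the period, which the source does not state, and the function name `implied_cagr` is illustrative only.

```python
def implied_cagr(growth_multiple: float, years: float) -> float:
    """Annual rate that compounds to `growth_multiple` over `years`.

    Assumes constant compound growth, a simplification not taken from the source.
    """
    if growth_multiple <= 0 or years <= 0:
        raise ValueError("growth_multiple and years must be positive")
    return growth_multiple ** (1.0 / years) - 1.0


# Sevenfold growth over the 10 and 20 year horizons quoted in the text.
for horizon in (10, 20):
    rate = implied_cagr(7.0, horizon)
    print(f"7x over {horizon} years -> {rate:.1%} per year")
```

Under this assumption, sevenfold growth corresponds to roughly 21.5% per year over 10 years or about 10.2% per year over 20 years.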

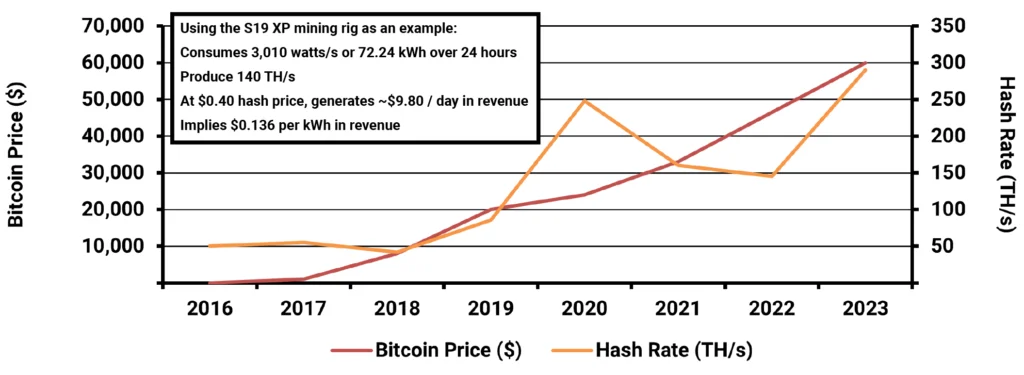

Crypto mining operations are spread across the US; the largest mining operations are not necessarily located in regions offering favorable electricity rates or land costs[1].

Utilities face challenges in predicting energy demand due to the volatile nature of cryptocurrency markets and the sporadic nature of mining operations.

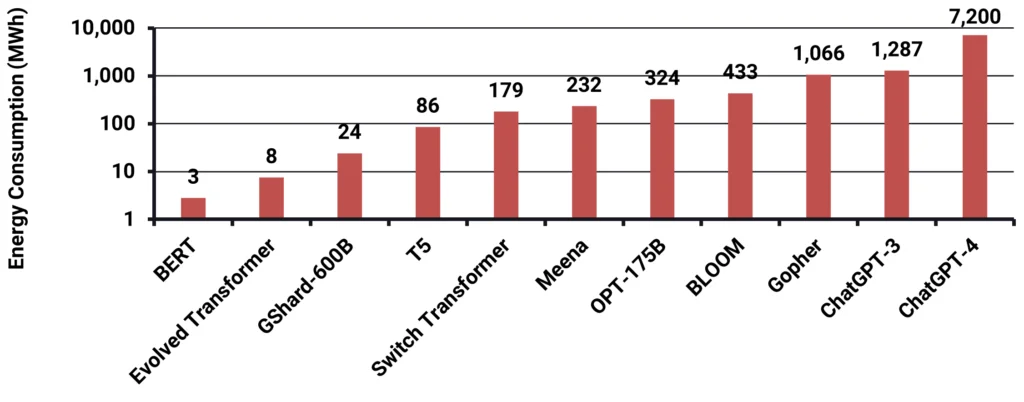

Artificial intelligence has also grown exponentially in recent years, with model training consuming ten times the energy of typical cloud processes. Figure 3 shows that training OpenAI’s ChatGPT-4 consumes up to 7.2 GWh.

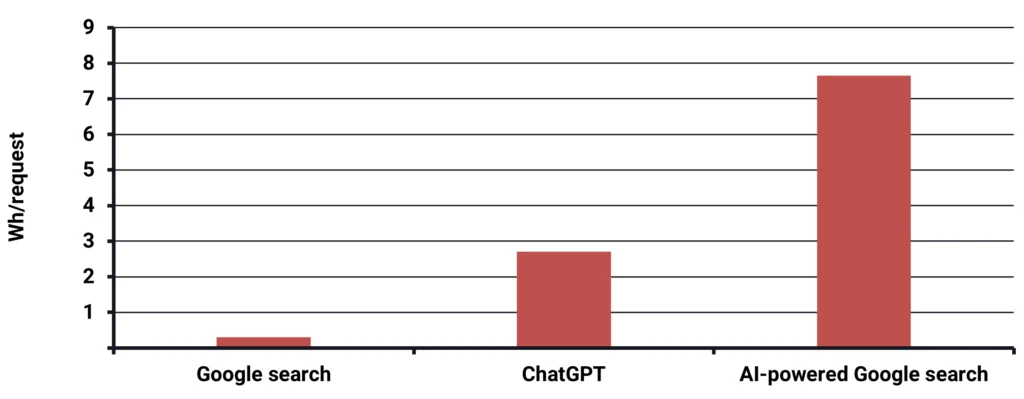

Similarly, Figure 4 shows that an AI-powered Google Search uses as much as 25 times the energy of a typical Google Search. As AI becomes embedded in more applications, its power and energy requirements are projected to rise significantly. Data centres supporting AI training and utilization will require advanced infrastructure to balance real-time processing needs with grid constraints. Key questions facing the industry include:

- How many more commercial AI models will be trained?

- Which industries will apply AI to automate processes at scale?

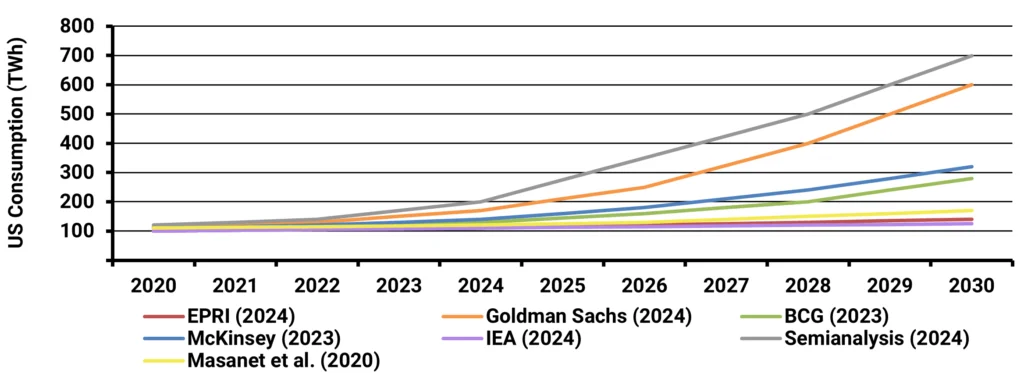

Forecasts for U.S. data centre energy consumption in 2030 vary widely, ranging from 120 TWh to over 600 TWh, as shown in Figure 5. This lack of consensus highlights the uncertainty in estimating data centre growth, driven by differing assumptions about efficiency improvements and sectoral expansion. Both top-down estimates based on historical growth rates and bottom-up forecast methods based on processor sales and power requirements have their limitations, further complicating grid system planning.

The following section helps demystify the details of data centre energy intensity, subloads, flexibility, energy efficiency options and more, providing insight into the tools required to develop a sound outlook for data centre growth.

Data Centre Subloads, Energy Efficiency, and Flexibility

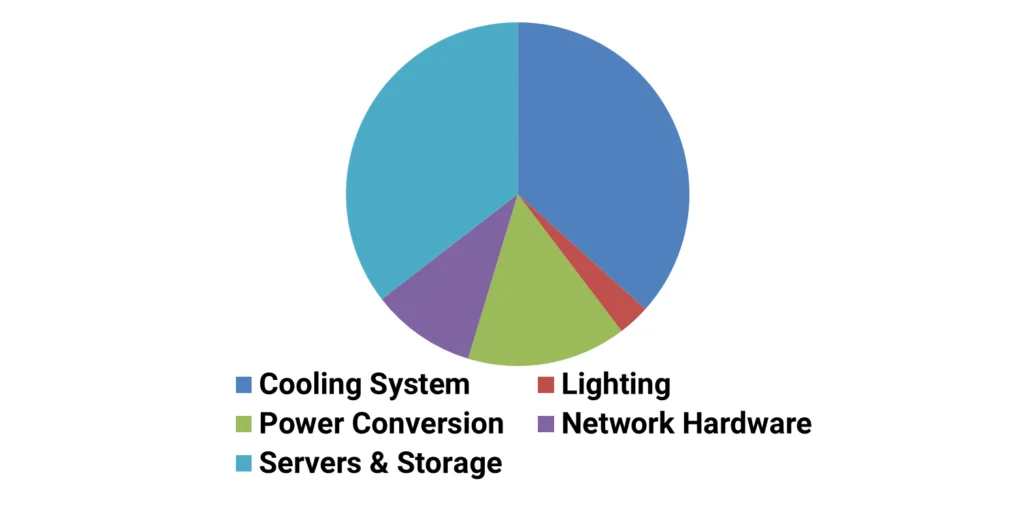

In a typical data centre today, more than 70% of energy is consumed by cooling, servers, and storage, with power conversion and network hardware contributing approximately 25% of load, while lighting typically accounts for less than 3%, as shown in Figure 6.

Power Usage Effectiveness (PUE), a key metric for non-IT load efficiency, is the ratio of total data centre power usage to critical IT power, which includes servers, storage, and network hardware. While average PUEs fell substantially until 2013, progress has since plateaued. Industry leaders like NREL, Google, and Meta have achieved PUEs as low as 1.03 through innovations like liquid cooling, though the broader industry has yet to match these[2].
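The PUE ratio can be made concrete with a short calculation. This is a minimal sketch: the 10 MW IT load and the comparison PUE of 1.5 are hypothetical values chosen for illustration, and only the 1.03 figure comes from the text.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    if total_facility_kw < it_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_kw


def non_it_overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a PUE."""
    return it_kw * (pue_value - 1.0)


it_load_kw = 10_000.0  # hypothetical 10 MW of critical IT load
for label, value in (("PUE 1.03 (from the text)", 1.03),
                     ("PUE 1.50 (hypothetical comparison)", 1.50)):
    overhead = non_it_overhead_kw(it_load_kw, value)
    print(f"{label}: {overhead:,.0f} kW of non-IT load")
```

For the same IT load, a PUE of 1.03 implies about 300 kW of non-IT overhead, against 5,000 kW at the hypothetical PUE of 1.5.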

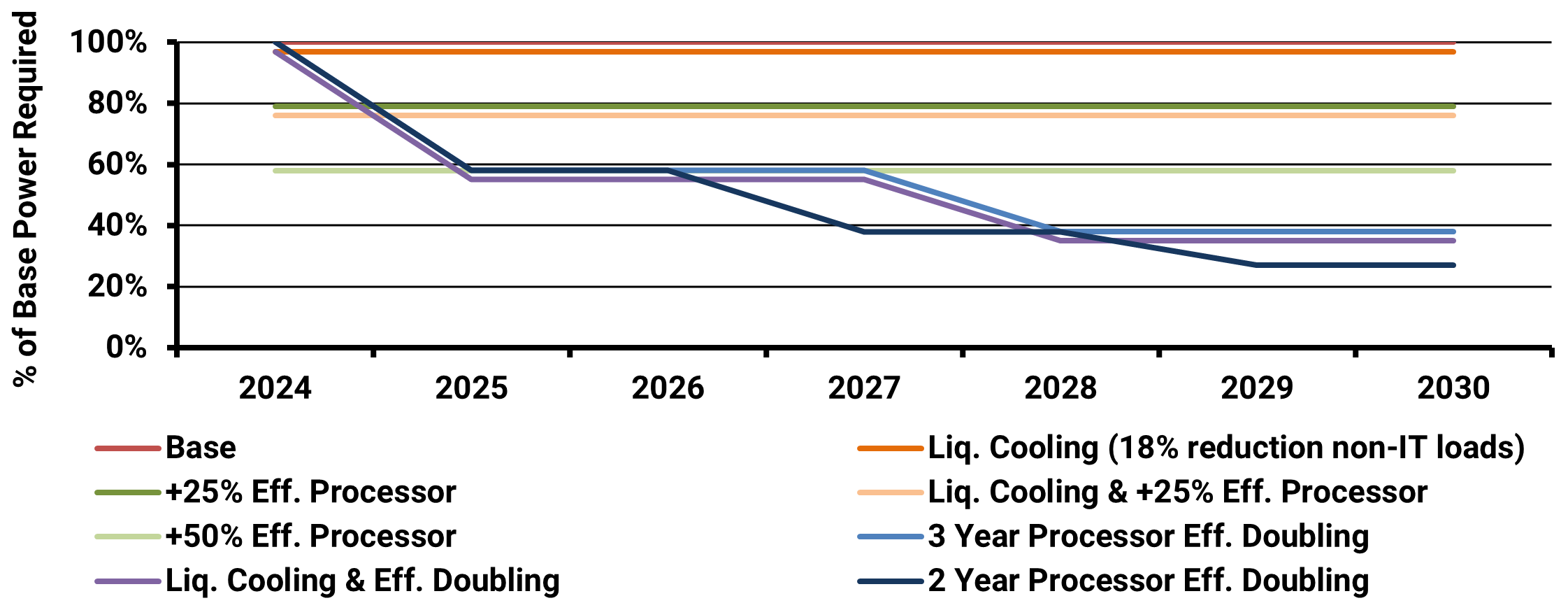

Figure 7 shows various outlooks for more efficient data centres relative to a base scenario of 100%, via both non-IT and processor efficiency improvements. It is critical to note that the specific processor and cooling technologies present in each data centre, or even server rack, will directly impact its power requirement.

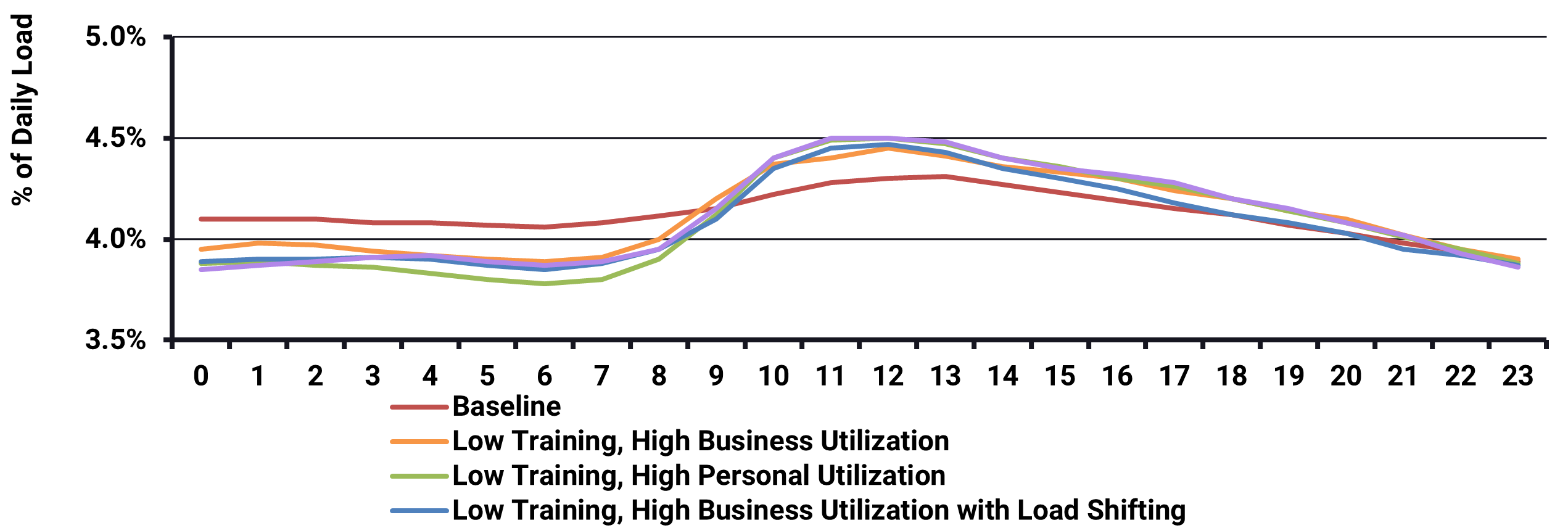

An estimated data centre load profile within the AI sector is shown in Figure 8, with varying levels of training, utilization, and load shifting. Flat, flexible load typically represents asynchronous processing, while synchronous processing drives profile shape.

The relatively flat profile in Figure 8 shows very little weather sensitivity in its cooling systems, and may only reflect critical IT loads. Other literature on data centre subload analysis, such as Ghatikar et al. (2010), suggests that data centre load shapes include more weather sensitivity on daily and seasonal bases, as cooling must maintain safe operational temperatures within these facilities[3].
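The distinction between synchronous load, which sets the profile shape, and asynchronous load, which can be moved, can be sketched as a simple load-shifting exercise. All numbers below are hypothetical, and the even redistribution rule is an assumption made for illustration rather than a method from Energeia’s research. The sketch ignores capacity limits on the off-peak hours.

```python
def shift_flexible_load(sync_mw, async_mw, peak_hours):
    """Move asynchronous load out of `peak_hours` and spread it evenly
    over the remaining hours, keeping total daily energy unchanged."""
    n = len(sync_mw)
    if len(async_mw) != n:
        raise ValueError("profiles must have the same length")
    if any(h < 0 or h >= n for h in peak_hours):
        raise ValueError("peak_hours must index into the profiles")
    off_peak = [h for h in range(n) if h not in peak_hours]
    if not off_peak:
        raise ValueError("at least one off-peak hour is required")

    moved_mwh = sum(async_mw[h] for h in peak_hours)
    uplift = moved_mwh / len(off_peak)  # extra MW added to each off-peak hour

    shifted = []
    for h in range(n):
        if h in peak_hours:
            shifted.append(sync_mw[h])  # only synchronous load remains
        else:
            shifted.append(sync_mw[h] + async_mw[h] + uplift)
    return shifted


# Hypothetical 24-hour profile in MW (not data from the source).
sync_mw = [10] * 8 + [20] * 12 + [10] * 4   # user-driven, shapes the profile
async_mw = [15] * 24                        # flat, deferrable processing
peak_hours = {17, 18, 19, 20}               # assumed system peak window

shifted = shift_flexible_load(sync_mw, async_mw, peak_hours)
print("Load at 18:00 before:", sync_mw[18] + async_mw[18], "MW")
print("Load at 18:00 after: ", shifted[18], "MW")
```

In this toy case, demand during the assumed peak window falls to the synchronous component alone, while the facility’s own maximum rises slightly in off-peak hours. That trade-off is one reason coincidence with system peak matters more than the facility peak.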

Data on the actual amount of synchronous vs. asynchronous processing by IT-intensive sector is not widely available. Smart metering data analysis could provide key insight into the synchronicity of data centre load requirements and the associated flexibility, providing utilities with a clearer view of data centre load shapes and their coincidence with asset and system peak demands.
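As an illustration of the kind of smart metering analysis described above, the sketch below computes two simple indicators from hourly interval data: load factor and the share of a site’s own peak that occurs at the hour of system peak. The profiles are hypothetical, and the metric name `peak_coincidence` and its definition are assumptions for this example; utilities define coincidence in several ways.

```python
def load_factor(load_mw):
    """Average load divided by maximum load over the period."""
    if not load_mw or max(load_mw) <= 0:
        raise ValueError("load profile must contain positive values")
    return (sum(load_mw) / len(load_mw)) / max(load_mw)


def peak_coincidence(customer_mw, system_mw):
    """Customer load at the system peak hour divided by the customer's own peak."""
    if len(customer_mw) != len(system_mw):
        raise ValueError("profiles must have the same length")
    system_peak_hour = max(range(len(system_mw)), key=lambda h: system_mw[h])
    return customer_mw[system_peak_hour] / max(customer_mw)


# Hypothetical hourly data in MW (not taken from the source).
customer_mw = [30] * 17 + [20] * 4 + [30] * 3   # site that curtails in the evening
system_mw = [500] * 24
system_mw[18] = 900                             # assumed system peak at 18:00

print(f"Load factor:      {load_factor(customer_mw):.2f}")
print(f"Peak coincidence: {peak_coincidence(customer_mw, system_mw):.2f}")
```

A high load factor combined with a low peak coincidence would indicate a flat load that nevertheless avoids the system peak, which is the profile that flexible, asynchronous processing could in principle deliver.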

Data Centre Siting and Sizing

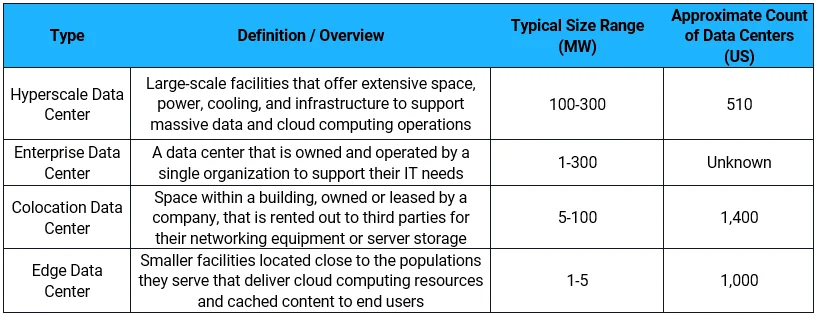

Data centres come in a wide variety of types, categorized into four main groups, as shown in Table 2. The largest hyperscale facilities are typically developed by major companies like Google and Meta. While their total capacity can exceed 300 MW, these centres are usually constructed in modular blocks of around 25 MW over time. This phased development approach is similar to that of traditional industrial facilities.

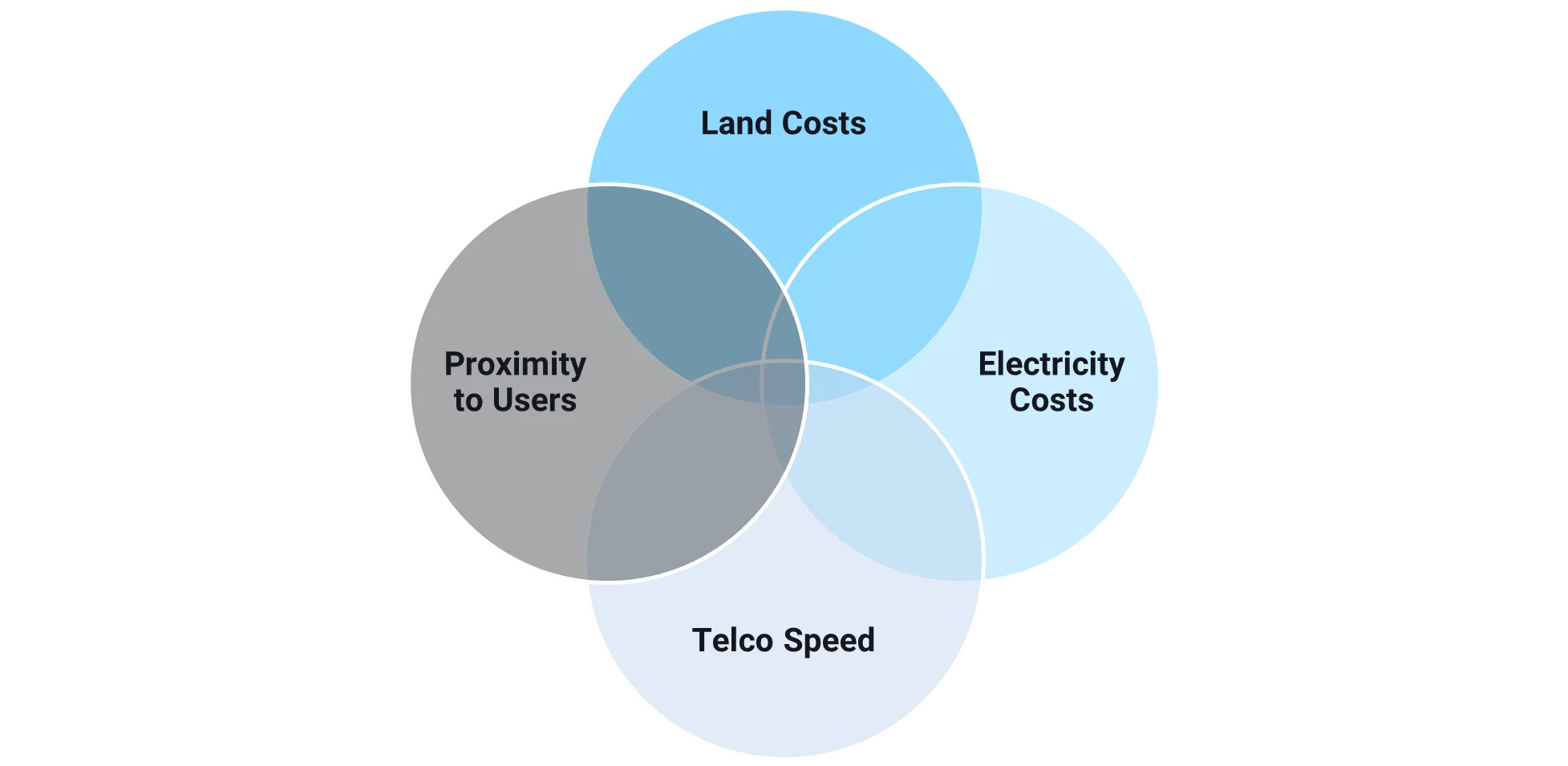

Key data centre hot spots in the US include Northern Virginia, Phoenix, Dallas, Atlanta, and Silicon Valley, with average data centres in these areas requiring 5 to 14 MW per site. Land and electricity costs drive the fundamental economics of siting, while telecommunications speeds and user proximity can dictate the type and load shape of data centres. Edge data centres are optimized for higher levels of synchronicity than hyperscale centres, and are therefore sited closer to end users. Figure 9 summarizes the key factors impacting data centre siting.

Industry Levers for Least Cost Integration

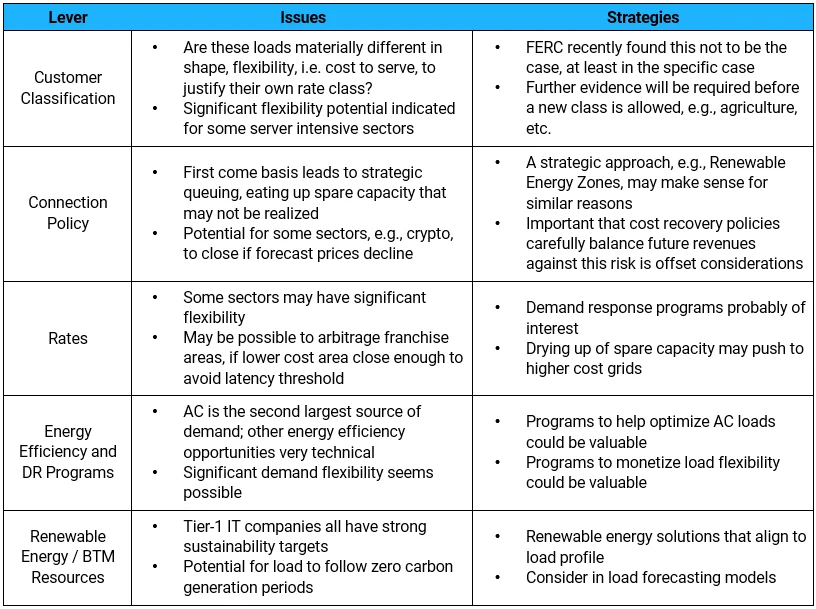

Load serving entities face significant risk in integrating data centres at least cost in the coming years. Table 3 describes a series of key potential levers for utility system planners to best manage data centre integration, from rate design and demand response programs to distributed renewable development and the definition of an entirely new customer class.

Takeaways and Recommendations

Energeia’s key takeaways and recommendations for integrating data centres into distribution systems (derived from Energeia’s best practice research and innovative analysis) are summarized below.

Key Takeaways:

- Growth in server-intensive industries is uncertain, but the fundamentals suggest it has legs for at least the next 10 years

- Growth will be uneven, focused on areas near major population centres and fiber links, with low real estate and electricity costs

- New connections will vary in size, with the largest connections likely near to population centres (synchronous) or major fiber links with low-cost land and electricity (asynchronous) – the latter is for overflow only after load sharing

- A significant portion of the load seems likely to reflect underlying economic and demographic patterns

- There is still significant potential for energy efficiency to reduce consumption per compute/storage activity, including AC and standby power opportunities

Key Recommendations:

- Determine the nature of your utility’s likely share of IT intensive industry load, to allocate appropriate levels of effort:

  - How close are you to population or business centres?

  - How good is your fiber connectivity?

  - How low are your land and electricity prices?

  - How much spare capacity do you have in the medium voltage and sub-transmission networks in areas of low land prices, connected to the fiber optic backbone?

- Consider opportunities for strategic planning and connection policies, e.g., as is done for renewable energy

- Be proactive with cost-reflective rates and associated demand response programs, as best practice here does not yet exist

[1] Tracking electricity consumption from U.S. cryptocurrency mining operations (2024), U.S. Energy Information Administration (EIA)

[2] High-Performance Computing Data Center, Computational Science, NREL

[3] Demand Response and Open Automated Demand Response Opportunities for Data Centers (2010)

For more detailed information regarding the key challenges of analysing and optimising electrification, best practice methods, and insight into their implementation and implications, please see Energeia’s webinar and associated materials.

For more information or to discuss your specific needs, please request a meeting with our team.
